INTELLIGENT SYSTEMS IN ACCOUNTING, FINANCE AND MANAGEMENT. Intell. Sys. Acc. Fin. Mgmt. 13, 217–250 (2005)

Published online in Wiley InterScience (www.interscience.wiley.com) DOI: 10.1002/isaf.269

ASSESSING THE PREDICTIVE PERFORMANCE OF ARTIFICIAL NEURAL NETWORK-BASED CLASSIFIERS BASED ON DIFFERENT DATA PREPROCESSING METHODS, DISTRIBUTIONS AND TRAINING MECHANISMS

ADRIAN COSTEA* AND IULIAN NASTAC

Turku Centre for Computer Science, Institute for Advanced Management Systems Research, Åbo Akademi University, Turku, Finland

SUMMARY

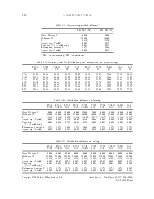

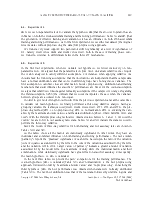

We analyse the implications of three different factors (preprocessing method, data distribution and training mechanism) on the classification performance of artificial neural networks (ANNs). We use three preprocessing approaches: no preprocessing, division by the maximum absolute values and normalization. We study the implications of input data distributions by using five datasets with different distributions: the real data, uniform, normal, logistic and Laplace distributions. We test two training mechanisms: one is a gradient-descent technique improved by a retraining procedure, and the other is a genetic algorithm (GA), which is based on the principles of natural evolution. The results show statistically significant influences of all individual and combined factors on both training and testing performances. A major difference from other related studies is that, for both training mechanisms, we train the network using as the starting solution the one obtained when constructing the network architecture. In other words, we use a hybrid approach that refines a previously obtained solution. We found that when the starting solution has relatively low accuracy rates (80–90%) the GA clearly outperformed the retraining procedure, whereas the difference was smaller to non-existent when the starting solution had relatively high accuracy rates (95–98%). As reported in other studies, we found little to no evidence of crossover operator influence on the GA performance. Copyright © 2005 John Wiley & Sons, Ltd.

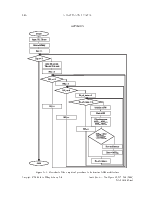

* Correspondence to: A. Costea, Turku Centre for Computer Science, Institute for Advanced Management Systems Research, Åbo Akademi University, Lemminkäisenkatu 14 A, FIN-20520 Turku, Finland. E-mail: adrian.costea@abo.fi

Predictive data mining has two different aims: (1) the uncovering of hidden relationships and patterns in the data, and (2) the construction of usable prediction models (Zupan et al., 2001). One type of prediction model is the classification model, that is, a model for predicting the relative positions of newly observed cases against what is known. Financial performance classification problems concern many business players: from investors to decision makers, from creditors to auditors. They are all interested in the financial performance of the company, in its strengths and weaknesses, and in how the decision process can be influenced so that poor financial performance or, worse, bankruptcy is avoided. Usually, the classification problem literature emphasizes binary classification, also known as the two-group discriminant analysis problem, which is a simpler case of the classification problem. In the case of binary classification everything is seen in black and white (e.g. a model which implements a binary classifier would just show a bankruptcy or a non-bankruptcy situation, giving no detailed information about the real problems of the company). More information would be obtained from the classification models if one particular business sector were divided into more than two financial performance classes (and it would be easier to analyse the companies placed in these classes). This study introduces an artificial neural network (ANN) trained using genetic algorithms (GAs) to help solve the multi-class classification problem. The predictive performance of the GA-based ANN will be compared with that of a retraining (RT)-based ANN, which is a new way of training an ANN based on its past training experience and weight reduction. Four different GA-based ANNs will be constructed based on four different crossover operators. At the same time, three different RT-based ANNs are used, depending on the stage of the RT algorithm at which the effective training and validation sets are generated. The two training mechanisms share the same ANN architecture. A new empirical method to determine the proper ANN architecture is introduced. In this study, the solution (set of weights) determined when constructing the ANN architecture is refined by the two training mechanisms. The training mechanisms therefore start from an initial solution that is not randomly generated, as it is in the majority of the reported related studies (Schaffer et al., 1992; Sexton and Gupta, 2000; Sexton and Sikander, 2001; Pendharkar, 2002; Pendharkar and Rodger, 2004). Moreover, our study investigates the influence of data distributions and the preprocessing approach on the predictive performance of the models. Very few researchers have studied the implications of data distributions on the predictive performance of ANNs in isolation; rather, they have been studied in combination with other factors, such as the size of the ANN (in terms of number of hidden neurons) and input data and weight noise (Pendharkar, 2002).
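To make the idea of refining a non-random starting solution concrete, the following is a minimal sketch, not the authors' implementation. It assumes a one-hidden-layer network stored as a flat weight vector, classification accuracy as the fitness function, elitist selection, one-point crossover and Gaussian mutation. The function names (`forward`, `accuracy`, `refine_with_ga`) and all parameter values are hypothetical, and the one-point operator is only an illustrative choice that need not match any of the four crossover operators used in the study.

```python
import numpy as np

def forward(weights, X, n_in, n_hidden, n_out):
    """Class scores of a one-hidden-layer network given a flat weight vector."""
    split = n_in * n_hidden
    W1 = weights[:split].reshape(n_in, n_hidden)
    W2 = weights[split:].reshape(n_hidden, n_out)
    return np.tanh(X @ W1) @ W2

def accuracy(weights, X, y, shape):
    """Fraction of rows whose highest-scoring class equals the label."""
    return float(np.mean(forward(weights, X, *shape).argmax(axis=1) == y))

def refine_with_ga(start, X, y, shape, pop_size=20, generations=30,
                   sigma=0.1, mutation_rate=0.1, seed=0):
    """Refine a given weight vector with a simple elitist GA (assumed design)."""
    rng = np.random.default_rng(seed)
    # Seed the population around the starting solution instead of at random.
    pop = start + sigma * rng.standard_normal((pop_size, start.size))
    pop[0] = start  # keep the unperturbed starting solution in the population
    for _ in range(generations):
        fit = np.array([accuracy(w, X, y, shape) for w in pop])
        elite = pop[np.argsort(-fit)][: pop_size // 2]  # best half survives
        children = []
        while len(children) < pop_size - len(elite):
            a, b = elite[rng.integers(len(elite), size=2)]
            cut = rng.integers(1, start.size)           # one-point crossover
            child = np.concatenate([a[:cut], b[cut:]])
            mask = rng.random(start.size) < mutation_rate
            child = child + mask * sigma * rng.standard_normal(start.size)
            children.append(child)
        pop = np.vstack([elite, np.array(children)])
    fit = np.array([accuracy(w, X, y, shape) for w in pop])
    return pop[fit.argmax()], float(fit.max())
```

Because the best half of each generation is carried over unchanged, the accuracy returned on the data passed in can never fall below that of the starting solution. This says nothing about accuracy on unseen data, which would need a separate test set.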
Some studies have focused on the transformation of the input data to help increase the classification accuracy, as well as to improve the learning time. For example, Vafaie and DeJong (1998) proposed a system for feature selection and/or construction which can improve the performance of the classification techniques. They applied their system to an eye-detection face-recognition system, demonstrating substantially better classification rates than competing systems. Zupan et al. (1998) proposed function decomposition for feature transformation. Significantly better results were obtained in terms of accuracy rates when the input space was transformed using feature selection and/or construction. Few research papers have studied different data preprocessing methods to help improve ANN training. Koskivaara (2000) investigated the impact of four preprocessing techniques on the forecasting capability of ANNs when auditing financial accounts. The best results were achieved when the data were scaled either linearly or linearly on a yearly basis.
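The three preprocessing approaches compared in this study (no preprocessing, division by the maximum absolute values and normalization) can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the function name `preprocess` and its method labels are hypothetical, scaling is assumed to be applied per input variable (column), and "normalization" is assumed to mean subtracting the column mean and dividing by the column standard deviation.

```python
import numpy as np

def preprocess(X, method="none"):
    """Apply one of three column-wise preprocessing schemes to a 2-D array."""
    X = np.asarray(X, dtype=float)
    if method == "none":
        return X.copy()                      # raw data, left untouched
    if method == "maxabs":
        scale = np.abs(X).max(axis=0)        # largest absolute value per column
        scale[scale == 0] = 1.0              # avoid dividing by zero
        return X / scale
    if method == "zscore":                   # assumed meaning of "normalization"
        std = X.std(axis=0)
        std[std == 0] = 1.0                  # constant columns map to zero
        return (X - X.mean(axis=0)) / std
    raise ValueError(f"unknown method: {method}")
```

In a train/test setting, the scaling statistics would normally be computed on the training set and reused for the test set. The sketch omits this for brevity.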
